ESSENTIAL ELECTRONICS FOR A2 LEVEL by Dr Chris Barnes PhD Eng. QTS, MCIEA, GW4BZD.

PART 1 CONTROL AND MICROPROCESSOR BASED SYSTEMS

Chapter 1 Open- and closed-loop control

The basis for analysis of a control system is the foundation provided by linear system theory, which assumes a cause-effect relationship for the components of a system. A component or process to be controlled can be represented by a block. Each block possesses an input (cause) and output (effect). The input-output relation represents the cause-and-effect relationship of the process, which in turn represents a processing of the input signal to provide an output signal variable, often with power amplification. An open-loop control system utilizes a controller or control actuator in order to obtain the desired response (Fig. 1).

![]()

Open-loop

control system.

A good example would be a street light controller.

When the light level falls, the light sensor converting the light level to an electrical signal detects this. The comparator gives an output when its threshold level is crossed, and the driver then switches the lamp on. Good automatic streetlight systems will have the light sensor placed so that it cannot pick up the light from the lamp otherwise this would have affected the operation of the system. On the picture below you can see the sensor on top of the lampshade so that it can respond to the skylight and not to the lamp.

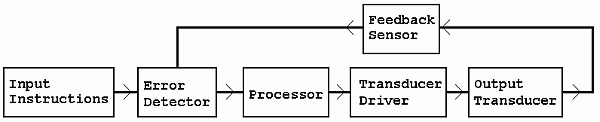

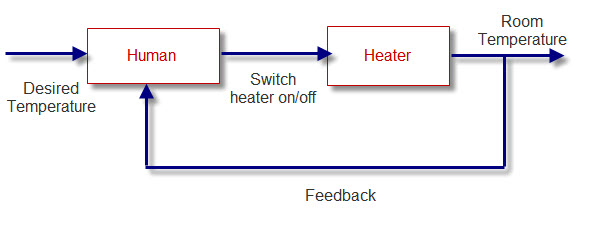

In contrast to an open-loop control system, a closed-loop control system utilizes an additional measure of the actual output in order to compare the actual output with the desired output response (Fig. 2). A standard definition of a feedback control system is a control system which tends to maintain a prescribed relationship of one system variable to another by comparing functions of these variables and using the difference as a means of control. In the case of the driver steering an automobile, the driver uses his or her sight to visually measure and compare the actual location of the car with the desired location. The driver then serves as the controller, turning the steering wheel. The process represents the dynamics of the steering mechanism and the automobile response.

Closed-loop

control system.

A feedback control system often uses a function of a prescribed relationship between the output and reference input to control the process. Often, the difference between the output of the process under control and the reference input is amplified and used to control the process so that the difference is continually reduced. The feedback concept has been the foundation for control system analysis and design.

Applications for feedback systems

Familiar control systems have the basic closed-loop configuration. For example, a refrigerator has a temperature setting for desired temperature, a thermostat to measure the actual temperature and the error, and a compressor motor for power amplification. Other examples in the home are the oven, furnace, and water heater. In industry, there are controls for speed, process temperature and pressure, position, thickness, composition, and quality, among many others. In the automotive industry feedback control is used for ABS and for Cruise Control. In electronic communications closed loop control is used to achieve ALC in transmitters, AGC in receivers and even in automatic matching in ATUS (Automatic antenna tuning units). Feedback control concepts have also been applied to mass transportation, electric power systems, automatic warehousing and inventory control, automatic control of agricultural systems, biomedical experimentation and biological control systems, and social, economic, and political systems.

A practical closed loop control system

Control systems have input sensors which generate signals that instruct the system to act. The instructions specify what the control system should do. It could be something simple like a voltage used to specify how bright a lamp should be. It could be more complex like a binary code used to change a TV channel. Here are some examples...

Resistive Transducers:

Switches:

Start, Stop, Reset, Sense machinery position.

Combination Lock.

Pressure mat.

Resistors

LDR for light level measurement.

Thermistor for temperature measurement.

Potentiometer for angle measurement.

Potentiometer for setting a reference voltage.

Strain gauge.

Sound sensors:

Microphone, Ultrasound sensor.

Time information:

Astable produces timed pulses which trigger the control system to act.

A microcontroller that has computer code to instruct the control system to act.

Digital logic circuit

Logic gates, Counters, Shift registers, Latches.

Microcontroller

Analogue input.

Digital inputs and outputs.

Analogue circuits

Comparator.

Schmitt trigger.

Summing Amplifier.

Difference Amplifier.

These are needed to drive the output transducers. Mostly the processor has insufficient power to drive these output devices. If higher power circuits like railway locomotives need to be controlled, complex high power driver circuits are needed. Most output devices need some sort of driver.

MOSFET Switch

MOSFET Source Follower

BJT Switch

BJT Emitter Follower

Voltage Follower

Relay

H Bridge Driver

It could be anything from a single light bulb to the avionic controls for an entire aircraft.

Motors

Conventional

Stepper

Servo

Light

Bulbs

LEDs

LASERs

LED Displays

Seven Segment

LED matrix

LCD Displays

Sound

Piezoelectric Sounder

Loud Speaker

Buzzer

Siren

Closed loop control applied to heating simplest possible case

feedback path for heat

Real central heating system

When the home is cold, the heat sensor detects this and turns on the heater via a comparator giving an output to the driver at the switch-on temperature. The heater then produces heat, which eventually warms up the home. This is the feedback signal, for the heat sensor detects this warmth and eventually will turn off the heater. This system is also known as an on-off or bang-bang control system. The heater can only be switched fully on even if the home is just below the required temperature.

The automatic streetlight characteristics are illustrated by this graph of its input/output behaviour:

Light level

threshold

level

Lamp 12 noon 12midnight 12 noon

on

off

When the light level drops below the threshold level, the lamp switches on.

The home heater characteristics are illustrated by this graph:

Home temp.

threshold

level

Heater

on

off

The heater output affects the home temperature and this will result in the heater switching on and off repeatedly.

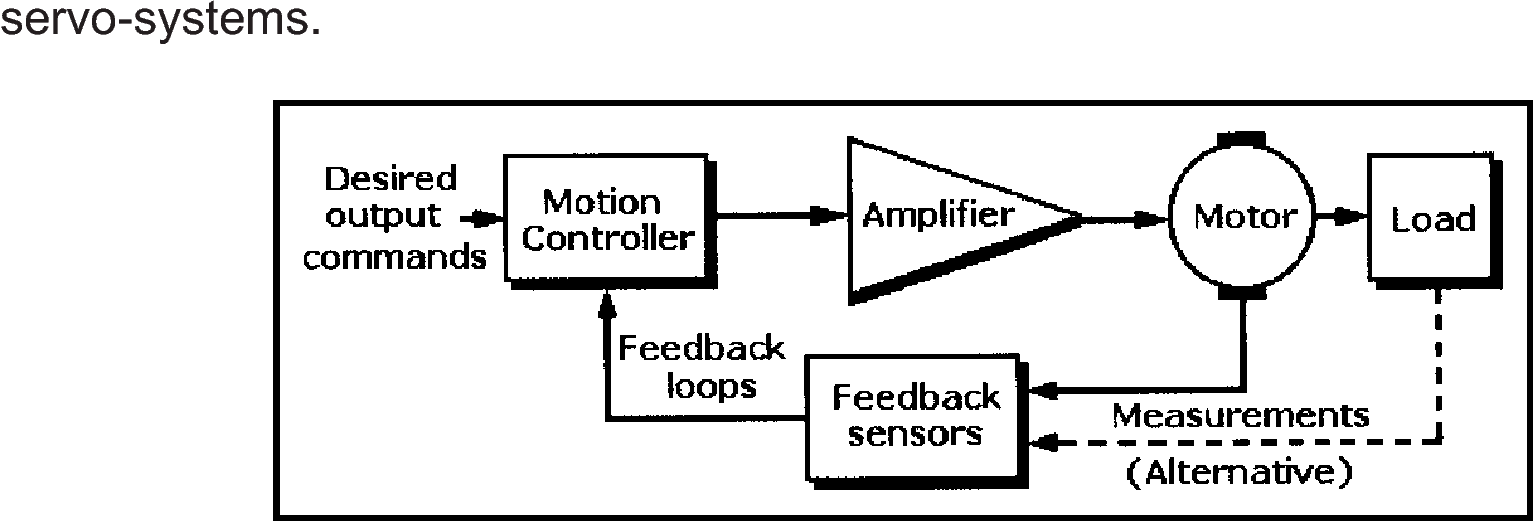

Simple motor speed (Servo control)

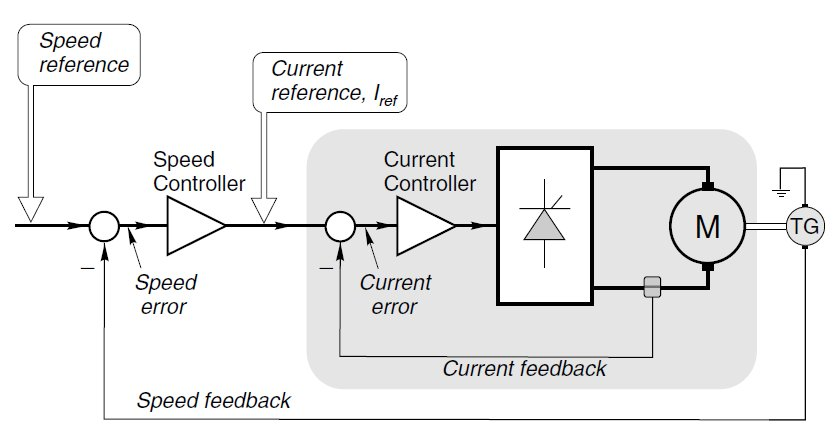

More sophisticated motor control with both current and speed feedback

In the following questions use these codes for your answers:

input = A feedback = B negative = C

output = D positive = E process = F

Which of the above:

Takes a signal from the environment?

Is missing from an open-loop control system?

Has some action on the input signal in a control system?

Produces a signal detectable in the environment?

Is used only in a closed-loop control system?

When used in a system gives control over the desired output? (2

codes required, enter 2nd code in answer to question 7.)

Enter here the 2nd part of answer to question 6

Drives the output of a system to an extreme when used

as a feedback signal?

Results in the output switching on and off continually, maintaining

a desired output level? (2 codes required, enter 2nd code in

answer to question 10.)

Enter here the 2nd part of your answer to question 9.

Digital computer systems

The use of a digital computer as a compensator device has grown since 1970 as the price and reliability of digital computers have improved.

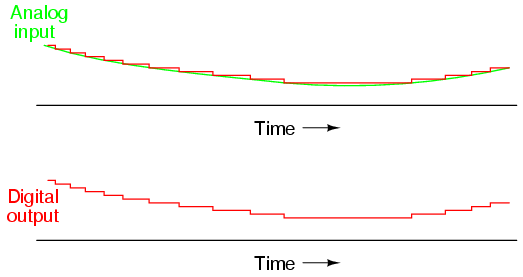

Within a computer control system, the digital computer receives and operates on signals in digital (numerical) form, as contrasted to continuous signals. The measurement data are converted from analog form to digital form by means of a converter. After the digital computer has processed the inputs, it provides and output in digital form, which is then converted to analog form by a digital-to-analog converter. See also Analog-to-digital converter; Digital-to-analog converter.

Automatic handling equipment for home, school, and industry is particularly useful for hazardous, repetitious, dull, or simple tasks. Machines that automatically load and unload, cut, weld, or cast are used by industry in order to obtain accuracy, safety, economy, and productivity. Robots are programmable computers integrated with machines. They often substitute for human labor in specific repeated tasks. Some devices even have anthropomorphic mechanisms, including what might be recognized as mechanical arms, wrists, and hands. Robots may be used extensively in space exploration and assembly. They can be flexible, accurate aids on assembly lines. See also Robotics.

Microprocessor Systems and Sub Systems

Binary data features in these systems as with logic and counter

A BIT, is a BINARY DIGIT.

A bit can be a zero or a 1.

A binary number made of eight bits, such as 11001010 is known as a BYTE.

Four bits, such as 1101 is known as a NIBBLE (half a byte and a joke).

Sixteen bits numbers are a WORD.

Thirty two bits ones are a LONG WORD.

Sixty four bits numbers are a VERY LONG WORD.

These numbers can be stored in REGISTERS inside chips (integrated circuits).

1k in binary systems is 1024.

These collections of bits can represent binary numbers. They can also represent decimal or other number systems.

The ASCII system uses them to represent the letters of the alphabet and punctuation. The ASCII TABLE gives the binary equivalents of the alphabet.

All this information is called DATA.

Numbers in microprocessor systems are often expressed in hexadecimal.

Indeed, most numbers and values for microprocessors are written as hexadecimal numbers. These can be written in several ways, including: - FA16, FAh, &HFA, 0xFA, 0XFA

The microprocessor is also called the CENTRAL PROCESSING UNIT (CPU).

There are very many cpu's and one of the most common is the 6502.

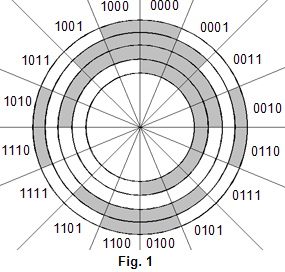

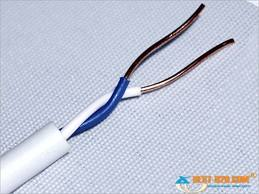

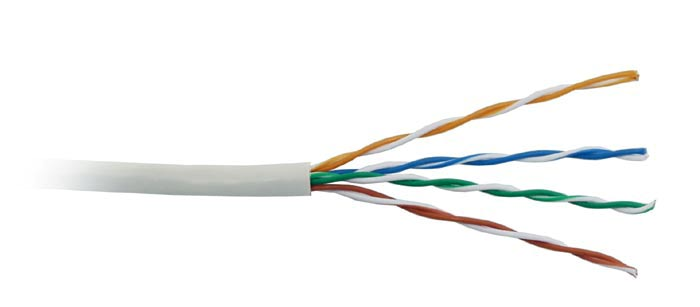

Buses

A bus is a collection of wires. Diagram A is a four bit bus. Zeros and ones can be put on the bus, 0 volts for zero and +5 volts to represent a one. The smallest number that can be put on a four bit bus is 0000. The largest is 1111 which is 15 in decimal and F in hex. Therefore sixteen different numbers can be placed on this bus, 0000 being the lowest and 1111 the highest. Rather than draw four wires, we use the representation shown in diagram B.

Many microprocessor systems use a eight bit data bus. The smallest number that can be placed on it is 00000000. The largest number is 11111111 which is equivalent to 255 in decimal. Therefore 256 different numbers can be placed on this bus. 255 in decimal is FF in hex.

A sixteen bit bus is shown in diagram C. The smallest number we can put on this bus is 0000000000000000. The largest number is 1111111111111111 which is 65535 in decimal. Therefore 65536 different numbers can be put on this bus. 65535 in decimal is FFFF in hex.

All the registers in the memory chips have their own individual addresses, like house numbers in a street. By putting its address, in binary, on an address bus we can select any individual register. Address buses are commonly 16 bits, so we can select any one of 65536 registers.

Microprocessor System Diagram Architecture of Generalised Microprocessor System.

There are two main types of architecture found in microprocessor control systems. The traditional von Neumann architecture uses a single set of wires (bus) along which both program and data instructions are fetched. This is the architecture on which most multi IC microprocessor control systems are based. The other common system is known as the Harvard architecture and in this the program instructions and the data are accessed on separate buses. This architecture is usually used in single IC microcontroller systems, e.g. PICs and AVRs and enables a much greater processing rate to be achieved.

A generalized von Neumann type programmable system is shown below showing the main sections. Each of the sections is connected to three sets of wires known as 'buses'.

The CPU can put a binary number on the address bus, to select an individual register in the ROM or ram or the I/O. The arrows on this bus show that addresses go one way only. Data at the selected address can be put on the data bus.

The CPU can also put data on this bus which can be written into a register of ram or i/o. It is not possible to write data into ROM (read only memory). This is shown by the single arrow on the ROM data bus and double arrows on the other two. The control bus instructs the chips to do various things, such as when to read or write etc.

The clock tells all the chips when to change what they are doing. Like the drill sergeant who shouts "LEFT, RIGHT, LEFT, RIGHT". The crystal control the speed of operation. In simple systems Memory chips are simply a collection of registers, each with its own address. Data, in the form of 0's and 1's, is stored in the registers.

ROM

ROM chips can be read from, but not written to. They are non volatile, which means that they retain their contents after power is removed. Most ROMs are programmed during manufacture of the chips.

Others, PROGRAMMABLE ROMS, PROMS, can have their contents programmed in after manufacture. The 2716 ROM shown above is an EPROM.

This is an erasable prom, where if you make a mistake, you can erase the contents by shining ultra-violet light through a window in the chip.

Some chips are ELECTRICALLY ERASABLE and are known as EEPROMS..

PIC CHIPS and AVRS have their own built in ROM

RAM

RAM means random access memory. A better name would be read/write memory. Ram is VOLATILE, meaning that when you switch off, the data it contains is lost.

There are two main types of ram, static and dynamic.

Static ram uses flip-flops to store bits, and so consumes current whether they are storing a 1 or a 0. Dynamic ram uses capacitors to store charges and use less power.

However, these stored charges leak away and have to be continually REFRESHED which makes the circuitry more complicated.

As an example, the 4118 is an 8 bit x1k static ram having an 8 bit data bus, D0-D7.

Data can be written to, or read from memory, depending the state of the WE pin. There may be several memory chips, so only one is selected at a time by taking the CS pin low.

Just as with ROM, micro-controllers such as PIC CHIPS and AVRS have their own built in ROM and so can be regarded as tiny computers on a single chip.

The Clock

Keeps the whole system synchronized

The clock is usually a square wave generator whose frequency is controlled by a crystal. In a typical control system it oscillates at between 1-8 MHz (1-8 million times a second) and controls the speed at which the system operates. But in computer systems it may be thousands of times faster than this rate.

Tristate Logic

As we saw earlier, Buses are the transport system for the electronic signals that pass between the sections of the control system. There are three main types of signals that are needed:-

a) data

b) addresses

c) control signals.

The data and address signals are multi-bit digital signals sent in parallel, usually with +5V representing a logic 1 and 0V representing logic 0, although there are some systems available with logic 1 being represented by voltages as low as 1.1V.

The control signals are often one bit digital signals.

Buses are used to prevent a proliferation of connections between the various sections of a programmable control system. Usually there is a separate bus for each of the three types of signals although there are some systems that use a shared bus for the data and address signals.

A bus can be thought of as being like a railway line connecting several stations together. Trains travel between the stations using the same track. Obviously, with a railway, care must be taken to ensure that two different trains do not require the track at the same time. The same is also true for electronic signals.

The data bus carries information around the system and is bi-directional. It is connected to all sections of the system.

These various sections need to be able to put information onto and take information from the data bus and so need to be physically connected to it. Reading information from the data bus is not a problem since all of the various sections of the system have high impedance inputs.

Putting information (writing) onto the data bus is more of a problem, since only one device can write data at a time. If two (or more) devices were to try to put data onto the data bus at the same time there would be a bus contention. This would be like two trains using the same section of track at the same time! Smoke would come out of somewhere! The short circuit would either cause one of the devices to fail or the track connecting them to melt.

To overcome this problem, all devices which put data onto the data bus are connected through Tri-state drivers. These drivers have three possible states -

"high", "low" and "disconnected", i.e. +5V, 0V and floating

This is so that when the device is NOT selected, its output just floats up and down with the signals on the data bus and so do not interfere with the data bus signals.

The

tri-state drivers are controlled by the address bus and control lines

and have an

output enable, (OE) control pin which

makes their outputs high impedance when the (OE) pin is logic

1. Many devices for use on data buses have tri-state drivers built

into them,

e.g. analogue to digital converters, (ADCs), memory

devices etc.

Every device in the microprocessor system that needs to receive or send data, outside the processor, needs a unique address. The address bus is used to carry the address signals around the system, and each device is connected either directly to the address bus or to an address decoder that is connected to the address bus.

The number of unique addresses is directly determined by the width of the address bus (number of separate wires). A 16 bit address bus can address 216, or 65536 different memory locations while a 32 bit address bus has 232, i.e. 4,294,967,296 addresses, a maximum of 4 gigabytes of directly addressable memory.

The control bus consists of signals from the processor and is mostly one bit. The actual number of control signals depends upon the type of processor used but some typical control signals are discussed below.

The read/write (R/W) control signal. When the microprocessor wants to read information from memory or from an input port it signals this by setting the R/W line to logic 1. When the microprocessor wants to write information into memory or to an output port it signals this by setting the R/W line to logic 0.

The Chip Select (CS) control signal. This is used when the microprocessor wants to access a peripheral chip

Interrupt requests

The microprocessor system may be measuring temperatures in the Sahara desert. Once a year it receives a radio signal telling it to stop measuring temperatures and send all last year's data to base. This is an INTERRUPT.

The CPU completes its current instruction. It then pushes any data it wishes to save onto the stack. It then jumps to a routine which services the interrupt. Once the interrupt routine is completed, it pulls the saved data from the stack and carries on measuring temperatures. There are two pins on the CPU which, when taken low, cause hardware interrupts.

IRQ can be sensed or ignored whichever depends on the value of the interrupt flag in the status register.

As we have seen most of the time the flow of data in or out of the processor through the data bus is controlled by the program code operating in the processor. But there are events that occur in a processor control system that must not wait. These include inputs from the keyboard, co-processors and messages from input/output ports to indicate that processes are complete (such as conversions by A to D converters.) or that the mains power has failed. The attention of the processor is attracted when the IRQ line is taken to logic 0. The processor will then finish executing the current instruction, save all of the current values in its registers, identify which device has requested an interrupt and then service the interrupting device. When this is completed the processor will return to the task it was doing before the interrupt occurred. Interrupts via the IRQ line are known as Maskable Interrupts because they can be disabled by the programmer, for time-sensitive routines.

There is

another interrupt request line which cannot be disabled.

This is

known as the Non-maskable Interrupt (NMI) line. When

taken to logic 0 it has the same effect as the IRQ line. The

use of the NMI line is usually restricted to very important

devices i.e. hard disk drives, etc.

Inputs and Outputs

Input and output devices are connected to microprocessor systems through ports.

A microprocessor system is pointless unless it can communicate with the outside world. It does this through an INTERFACE which is usually a plug or socket.

The CPU communicates with this interface via an INPUT/OUTPUT PORT chip.

These chips are called VERSATILE INTERFACE ADAPTORS or UNIVERSAL ASYNCHRONOUS RECEIVER/TRANSMITTERS (UARTS) etc.

Ports have their own registers with addresses, and the CPU can write data to, or read data from, these registers. If the system is controlling a set of traffic lights, then the CPU can write data to the registers, to switch the lights in the correct sequence. It can also read data that is provided to the port by sensors buried in the road.

This means that it can make decisions according to the amount of traffic and switch the lights accordingly. Since the system is digital and the outside world is mostly analogue, digital to analogue converters are required when providing an output, such as one to control the temperature of an oven.

An analogue to digital converter is required if the system is to read an analogue device, such as a thermometer.

The memory map shows how addresses have been allocated for memory and any other devices connected to the address bus. Here ram has been given the lowest 48k of addresses and ROM the highest 16k. Each area of memory can be subdivides into pages of 256 addresses.

Page zero occupies addresses 0000 to 255 in decimal or 0000 to 00FF in hex.

Page zero occupies addresses 0000 to 255 in decimal or 0000 to 00FF in hex

Some microprocessors require these ports to be addressed as part of the overall memory and are said to be Memory Mapped. The most efficient way of making inputs and outputs is through memory mapping where I/O ports are made to look like memory addresses. Address decoders accompany this method.

Other microprocessors use a separate control signal, Memory/Input-Output (M/IO), to switch between memory addresses and input/output addresses.

These ports are said to be I/O Mapped.

Some microprocessors require these ports to be addressed as part of the overall memory and are said to be Memory Mapped.

There are advantages and disadvantages to each system. In general, both systems use the Address Bus to provide the address of the port. Memory mapping of I/O ports is easy to implement and the resulting ports are treated and programmed just like any other memory location. However, it does restrict the amount of RAM and ROM that can be connected to the microprocessor. The diagram opposite shows a circuit to decode a 16 bit memory address to a memory mapped port at the address 00FF16. The output will be a logic 1 when the memory address is 00FF16 and the address is selected, i.e. CS is logic 0.

I/O mapping of ports requires the microprocessor to have the additional M/IO control line. The status of this line determines whether the information on the address bus is a memory address or the address of an I/O port. As a result, this additional control signal has to be included into the address decoding for every memory location, so making I/O mapping more complex to implement. However, I/O mapping does have the advantage that it does not restrict the amount of RAM and ROM that can be addressed by the microprocessor.

Microprocessors

that have a M/IO

control line usually only use the first 8 bits of their address bus

for addressing I/O ports. The diagram opposite shows the equivalent

I/O mapped circuit to decode an I/O mapped port at the address FF16.

The output will be a logic 1 when the memory address

is FF16, the address is selected, ie CS

is logic 0 and M/IO

is

logic 0.

While this is a simpler circuit than the equivalent Memory Mapped circuit, it should be remembered that the M/IO control line has to be incorporated every time there is information on the address bus, i.e. for every memory location.

There are many other control lines that are used by microprocessors. The Intel Pentium D processor, for example, has in excess of 100 separate control lines. It also has 64 data bus lines, 36 memory address lines, 226 positive power supply connections and 273, 0V connections!

PIC and AVR Microcontrollers

Associated with all programmable systems from PICS to PCs, the latter of which are really only complex programmable systems has grown a set of words and phrases or jargon. The more common ones are listed below along with their meanings.

|

bit |

binary digit, is a single 1 or 0 from a binary number |

|

byte |

8 bits, usually used to represent one character |

|

address |

A number which refers to each unique memory element in RAM and ROM. |

|

data |

the information read by and written from the processor. |

|

read |

when the processor takes in information or data from either ROM, RAM or the input port. |

|

write |

when the processor gives out information or data to either the RAM or the output port. |

|

hardware |

the general name for the actual electronic circuits that make up the programmable system. |

|

software |

the general name for the instructions that are followed by the processor. |

|

bus |

a common set of wires connecting together the memory elements of a programmable system. There are two main buses:- |

|

data bus |

responsible for carrying all of the information |

|

address bus |

carries the information to identify which memory element is required |

|

tristate buffer |

a

device to which the output of each memory element is connected. |

|

registers |

data is stored here before or after processing. The number and type of registers depends on the processor. |

|

accumulator(s) |

these are special registers into which the result of any arithmetic or logical process is placed. Some processors have more than one accumulator. In microcontrollers the accumulator is usually known as the Working Register |

|

flag register |

A special register where each of eight bits (Flags) indicate certain features of the last computation made. These flags can be used to determine what happens next. e.g. |

|

a zero flag |

is set to 1 if the result of the last operation was zero; |

|

a sign flag |

is set to 1 to indicate if the content of the Accumulator is negative; |

|

a carry flag |

that indicates if the maximum number has been exceeded. |

|

arithmetic

logic unit |

performs arithmetic or Boolean logic operations on the data. |

|

program counter (PC) |

This contains the address of the next instruction. |

|

instruction decoder |

This interprets each program instruction into a set of electronic conditions that make the data move around appropriately through the processor, and operates the control lines. |

|

stack pointer (SP) |

is a register containing the address of a region of memory called; |

|

the stack |

The stack is used as a temporary store for data, and grows in size as data is added, falling as data is removed. It is often located at the top of the memory with the current stack value decreasing as the stack fills up It is used sequentially i.e. last in, first out. The stack pointer is needed to identify the end of the stack. |

|

|

|

The concept of having a complete microcontroller (processor, RAM and ROM) on a single chip was far sighted in that such devices would enable the simplification of much of the circuitry required for electronic control of commercial, industrial and domestic equipment. Two companies in America began work on such SoCs in the late 1980s. Microchip Technologies produced the PIC (Programmable Interface Controller) in the early 1990s and Atmel released the AVR soon after. The success of these devices is largely due to their cost and processing ability. Since they are programmable devices they can be used in many applications and so can be mass produced which ensures low cost. Both PICs and AVRs are based on the Harvard architecture and initially incorporate 8-bit Reduced Instruction Set Computer (RISC) processor having only a few tens of instructions. This small number of instructions also improved the take up and use of these devices as they did not require the high degree of training needed to program them when compared to processors having Complex Instruction Sets Computer (CISC).

Since there are separate buses for the program instructions and data information, the instructions do not have to be restricted to being 8-bits wide like the data. In fact the early PICs used a 12-bit instruction set, later moving to 16-bits for some of the more complex devices. The AVRs use as standard a 16-bit word format for their instructions. The use of separate data and program buses enables 'pipe-lining' to be implemented so that while one instruction is being executed, the next instruction is pre-fetched from the program memory. This enables instructions to be executed in every clock cycle.

The early success of these devices prompted the manufacturers to add more facilities onto the chip including real time clocks, counter / timers, power on reset circuitry, Input and Output ports, Analogue to Digital Converters, Serial Input and Output ports etc. The whole chip is implemented in Complementary Metal Oxide Semiconductor (CMOS) and so has an inherent low power consumption. This coupled with a sleep mode makes them suitable for battery operated equipment as well. The clock speed of PICs and AVRs is wide ranging and many will operate at clock frequencies as high as 80MHz. While this may seem slow compared to the latest Intel processors, the specially optimised instruction set and with programs written in machine code, instructions ensures that they are able to carry out many operations and processes very quickly and efficiently.

In order to show the differences between the architecture of PICs/AVRs and discrete IC programmable controllers, a generic block diagram is shown below. It is based on one common family of PIC devices, the PIC16CXX. The major difference between the block diagram of a PIC and AVR device is that the instruction address bus and program data buses are 16-bit wide instead of the 12-bits shown in the diagram.

The actual number of ports on the devices depends upon the type number but it is not unusual for there to be as many as four parallel ports, a serial port, and four analogue to digital converter ports on some of the more complex PICs and AVRs.

Generic block diagram of a PIC/AVR.

Social

and Economic Implications of Single IC

Programmable Control

Systems (SoCs)

The effect of single IC programmable control systems on modern life has been significant. They have permeated all areas of modern appliances and machines and their effect will continue to increase as more powerful devices are developed. They are already used extensively in modern domestic appliances, e.g. washing machines, microwave cookers, video recorders, CD players etc. with the result that complex features can be incorporated into them as standard features. Most modern road vehicles have Engine Management Systems (EMS) controlling the operation of the engine. The heart of the EMS is a PIC or AVR which not only is able to maintain the efficient operation of the engine but is also able to monitor the operation of the engine and determine when servicing is required as well as help diagnose faults. Vehicles are just beginning to appear which have many programmable controllers incorporated which are able to monitor every aspect of the car, not just the engine. As a result, if it rains the windscreen wipers are switched on; if it goes dark the lights are turned on etc. without the driver having to take any action. Such cars obviously have ABS as a standard fitting which is controlled by a programmable controller. With so many electronic systems on board, the repair and maintenance of such vehicles becomes a specialised business.

The availability of many cheap computer peripherals e.g., printers, digital cameras, scanners etc. is also largely due to the use of control SoCs. They are able to monitor precisely the mechanical operation of these items and correct for imperfections in the mechanics of the peripheral. The result is that less precise mechanisms can be used, so reducing cost, but the controller is able to maintain the overall quality.

Each mobile phone has its own programmable control system on board to take care of the frequency management as the phone moves from one cell to another. Since this is a vital role but one that does not use a significant amount of processing power, it leaves the processor free for other tasks, like managing an address/phone number data base, generating ever complex (and annoying) ring tones, running games on the phone screen etc. However, since a mobile phone has to communicate with the local base station regularly when it is switched on so that the mobile phone system knows that it is available to receive calls, it means that the location of the phone is also known to within a few hundred metres. So you cannot hide if you have a mobile phone switched on!

The next major development with SoCs will be in their use in Smart cards. These will be the next version of credit and bank cards which will be able to store information about the owner as well as be used for intelligent information exchange in shops etc. The potential growth in this market is very large.

With the increasing complexity of the software used in microcontroller systems, it becomes very difficult to ensure that the software is reliable under all of the operating conditions that may be encountered. While it may be an inconvenience if a fault in the software causes the front door on a microprocessor controlled washing machine to suddenly open while the machine is full of water, software faults in engine management systems could be fatal. Consider the theoretical situation in which a car, with a microcontroller engine management system, pulls out to overtake a vehicle. The driver sees an oncoming vehicle in the distance and in order to ensure a completely safe overtake, changes into a lower gear. However, if an undetected software fault in the EMS causes the engine to falter and a crash results, no evidence of the software fault would be found in the subsequent enquiry, and the driver would be blamed for the accident.

There is still controversy surrounding the crash of the Mk2 Chinook helicopter ZD576 on the Mull of Kintyre in 1994, in which 29 people died including the two pilots, Flight lieutenants Trapper and Cook. In the subsequent Ministry of Defence enquiry, the pilots were found guilty of gross negligence. However, subsequent enquiries and press interest (mainly Computer Weekly) have raised questions about the reliability of the software used in the engine control system on the aircraft.

It is therefore vital that all software is fully and exhaustively tested to ensure that all operating conditions are covered reliably.

In the first 7 questions use these codes for your answers:

address bus = 1 clock = 4 processor = 7

control bus = 2 input port = 5 RAM = 8

data bus = 3 output port = 6 ROM = 9

Which one of the above:

Carries data within the control system?

Keeps the whole control system synchronised?

Provides permanent storage of data?

Is connected to the outside world? (2 codes, lowest first)

Carries information to select the required memory element?

Performs logic or arithmetic operations?

Provides temporary storage of data?

Give a disadvantage of using a microcontroller over an

electromechanical system?

A = cost B = repairability C = flexibility D = long life

State the number of chips used in PIC system.

How many output states has a tri-state logic gate?

Programming

The AQA GCE Specification states that candidates should be able to:

analyze a process into a sequence of fundamental operations;

convert a sequence of fundamental operations into a flow chart;

interpret flow charts and convert them into a generic microcontroller program;

recognize and use a limited range of assembler language microcontroller instructions (see Data Sheet, Appendix E);

write

subroutines to:

configure the input and output pins

read

data from a sensor

write data to an output device

give a

specified time delay

give a specified sequence of control

signals

perform simple arithmetic and logic operations

detect

events using polling and hardware interrupts;

compare the use of

hardware interrupts and polling to trigger events;

interpret

programs written with a limited range of assembler instructions.

It is not therefore the intention of this section of this book to attempt to make every student into an expert programmer, there are other complete GCE A levels and even Degree Courses for this.

An appendix, however, on understanding and programming a specific PIC chip has been included for completeness.

Here the aim is for students to gain sufficient knowledge of programming to be able to test any electronic systems including PICS that they might design. Although with some exam boards specific use of PICS in prcatical work is not a pre-requisite.

In the commercial world, once the electronics engineer has developed and perfected the electronic system, it would then be handed over to a programmer to write the control program that will be used in the actual product.

However, whether writing test programs or the fully functional program for the system, it is essential that the ability to think logically is developed and that complex sequences of operations can be broken down into small, simple sequences that can then be translated into programs.

Flow chart diagrams are one of several graphical methods that can be used to aid logical thought and are employed to help determine the sequence of operations required

Flow charts are easy-to-understand diagrams showing how steps in a process fit together. This makes them useful tools for communicating how processes work, and for clearly documenting how a particular job is done. Furthermore, the act of mapping a process out in flow chart format helps you clarify your understanding of the process, and helps you think about where the process can be improved. In this respect they are often used by Systems Analysts when thinking how to computerize a process such as issuing and return of library books or DVD videos in a shop.

A flow chart can therefore be used to:

Define and analyze processes.

Build a step-by-step picture of the process for analysis, discussion, or communication.

Define, standardize or find areas for improvement in a process

Also, by conveying the information or processes in a step-by-step flow, you can then concentrate more intently on each individual step, without feeling overwhelmed by the bigger picture.

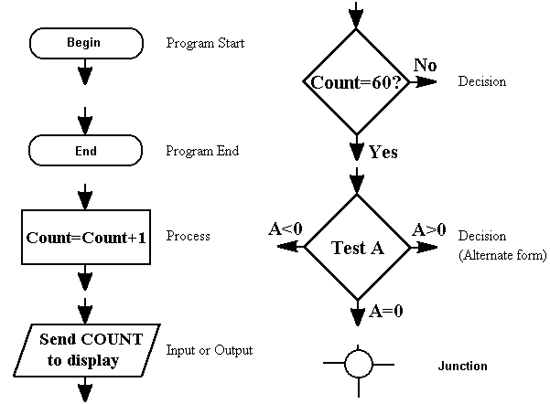

Three main types of symbol:

Elongated circles, which signify the start or end of a process.

![]()

Rectangles, which show instructions or actions.

![]()

Diamonds, which show decisions that must be made

Within each symbol, write down what the symbol represents. This could be the start or finish of the process, the action to be taken, or the decision to be made.

Symbols are connected one to the other by arrows, showing the flow of the process.

To draw the flow chart, brainstorm process tasks, and list them in the order they occur. Ask questions such as "What really happens next in the process?" and "Does a decision need to be made before the next step?" or "What approvals are required before moving on to the next task?"

Start the flow chart by drawing the elongated circle shape, and labeling it "Start".

Then move to the first action or question, and draw a rectangle or diamond appropriately. Write the action or question down, and draw an arrow from the start symbol to this shape.

Work through your whole process, showing actions and decisions appropriately in the order they occur, and linking these together using arrows to show the flow of the process. Where a decision needs to be made, draw arrows leaving the decision diamond for each possible outcome, and label them with the outcome. And remember to show the end of the process using an elongated circle labeled "Finish".

Finally, challenge your flow chart. Work from step to step asking yourself if you have correctly represented the sequence of actions and decisions involved in the process. And then (if you're looking to improve the process) look at the steps identified and think about whether work is duplicated, whether other steps should be involved, and whether the right people are doing the right jobs.

Tip:

Flow charts can quickly

become so complicated that you can't show them on one piece of paper.

This is where you can use "connectors" (shown as numbered

circles) where the flow moves off one page, and where it moves onto

another. By using the same number for the off-page connector and the

on-page connector, you show that the flow is moving from one page to

the next.

Flow charts are simple diagrams that map out a process so that it can easily be communicated to other people.

To draw a flowchart, brainstorm the tasks and decisions made during a process, and write them down in order.

Then map these out in flow chart format using appropriate symbols for the start and end of a process, for actions to be taken and for decisions to be made.

Finally, challenge your flow chart to make sure that it's an accurate representation of the process, and that that it represents the most efficient way of doing the job.

When planning software, one of the stages is to produce a flow chart. The shape of the box indicates its function. Further data is given as text inside the box.

Example Flow Charts

This system is of an electronically controlled fan that will monitor the air temperature every 60 seconds and decide whether the fan should be on or off.

START

delay 60s

input

temperature

is

temperature

over 20oC?

fan on fan off

Since this system would run indefinitely, there is no END terminal box. The use of the loop means that the operations are described once, but every 60 seconds the flowchart is used again and again. The label in each input/output box tells you which type it is.

The flow diagram is self explanatory and shows the correct use of the various symbols.

It is good practice to try to analyse everyday basic tasks into the separate steps that could be put into a flow chart and then to try writing a flow chart for that task, e.g. design a flow chart to produce a soft-boiled egg.

It is useful to remember that most programmable systems are very simple and can only operate on one instruction at a time. There should be no multiple processes going on at the same time in any flow chart!

When designing flow charts, it is useful to identify each of the operations needed for a particular process. The following examples may help.

Consider a

programmable control system reading in data from a sensor. If the

sensor is analogue then it will need an

Analogue to Digital

Converter (ADC) to change the analogue signal into a digital signal

which the control system can understand.

A detailed description of

ADCs is given in the next chapter on Input Subsystems, but for the

moment it is sufficient to know that an ADC needs a short logic 0

signal to be applied to its

Start Conversion (SC) pin in order to

make the conversion process start. When the ADC has finished the

conversion,

it sets its End of Conversion (EoC) pin to logic 1.

A

suitable flow chart for this process is shown opposite.

In this flow chart the processor keeps checking to see when the EoC pin of the ADC becomes logic 1. Such an operation is known as 'polling' and while it is very simple to implement it does use the full processing power of the processor and so stops the processor from carrying out any other operations. In the vast majority of cases this is not a problem and so is often used in simple test programs.

While some

programmable systems have real time clocks that can be used for time

delays, many do not. An easy way of generating a delay is to make

the processor execute a simple program a large number of times. Such

time delay loops are not accurate enough for time keeping because of

any interrupt routines that may run during the timing operation, but

are suitable when the time period is not critical.

The flow chart

for a simple time delay is shown opposite.

Writing data to an output port can be a simple process unless there is a large amount of data to transfer to a slow output device.

Consider an example where the output device is slow.

If there was no flow control for the data between the process controller and the output device then the output device would not be able to keep up with the transfer of data and so information would be lost.

To prevent this, a flow control system has to be established. This often takes the form of the process controller sending a pulse to the output device when there is valid data for the output device to read.

The output

device will then set a 'Wait' control line, say to

logic 1, as an

indication to the process controller that it should wait and not send

any more data.

When the output device has 'digested' the data it then sets the Wait control line to logic 0.

The process controller detects this and so makes the next byte of data available.

A flow diagram to enable this process to occur is shown opposite. It is worth noting the point in the program at which the process controller sends the signal to say that the data is available.

Converting Flowcharts into Programs

In order to be able to fully test a programmable system, it is obviously necessary to be able to write sufficient programs to activate every section. In order to do this it is essential for the electronics engineer to have some programming skills. The same skills for programming are required no matter what programming language is being used, the most important skills being to think logically, be able to break complex operations into single step functions and to remember that the programmable system will only do what you tell it to, which is not necessarily the same as what you want it to do!

There are three main types of programming languages:-

Machine code, the 1s and 0s that the machine understands directly,

Interpreted languages, including some Assemblers, Basic, Pascal, Visual Basic etc.

Compiled languages including some Assemblers, Delphi, C++ etc.

Despite the general move to Object Orientated Languages, there is little new in programming. Modern computers work so much faster and have much more storage capacity than they did in the past that modern languages take advantage of this. No matter what processor is being used, it will only be able to understand instructions that are written in its native language, Machine Code. These instructions consist of numbers and for most people are completely incomprehensible. Associated with each machine code instruction is a mnemonic, a sort of English abbreviation to explain what the instruction does.

The following examples are machine code instructions for the Intel 80X86 series of processors:-

the instruction 0x93, has a mnemonic XCHG BX, AX and means exchange the contents of the BX and AX registers whilethe instruction 0xBA1C0F, which has a mnemonic of MOV DX, 0F1C and means move or load the number 0F1C into register DX.

Assembly language is a language that will translate the mnemonics into machine code. This can be done in one of two ways depending upon the Assembly language. Some interpret the instructions when the program is executed, i.e. they will take each mnemonic instruction, translate it into machine code and then execute the machine code instruction.

Other Assembly language programs, when executed, will translate each mnemonic instruction into a machine code instruction and then store these machine code instructions in a separate place in memory, i.e. it Compiles the machine code instructions. Once all of the mnemonic instructions have been compiled the processor will then execute the machine code instructions.

As can be seen from the above descriptions Interpreted programs run slower than Compiled programs, but with modern processors operating so fast, and Interpreted programs being more interactive, they are eminently suitable for the testing of programmable systems.

While Assembler makes some attempt to use recognisable words as instructions, higher level languages e.g. Basic, Pascal, C, Java, Python etc. use English phrases as the instructions. These are then Interpreted or Compiled to machine code instructions for execution.

Sub routines

It makes sense when writing programs to break them up into small sections that can easily be tested (just like the actual electronic systems themselves which are built and tested as subsystems). These small sections have various names depending upon which program is used. ‘Basic’ calls them Subroutines, while Pascal calls them Procedures and C++ calls them Objects. These small sections can then be accessed from the main program when needed.

For example, a subroutine could be written to send data to an output port. Then whenever data has to be sent to this port, the data is passed to the subroutine, which outputs the data and then returns control back to the main program.

The AQA Microcontroller and Assembly Language.

The AQA specification assumes a generic microcontroller with a Harvard architecture and the following specification:-

a clock speed of between 1 and 20MHz;

an accumulator or working register, W, through which all calculations are performed;

a program counter, PC;

three 8-bit bi-directional ports - PORTA, PORTB and PORTC;

Three

data direction registers TRISA, TRISB and TRISC,

to determine whether the bits of each port are inputs or outputs.

If a bit is set to 1 then the port bit is an input, if the bit is

set to 0 then the port bit is an output.

E.g. if TRISA =

0x01, then bit D0 of PORTA is an input and bits D1

to D7 of PORTA are outputs.

a status register, SR, for which bit 0 is the carry flag, C, and bit 2 is the zero flag, Z;

A

clock prescaler, PRE, which can be set to divide the clock

frequency by 2 to 256.

If PRE is set to 1, then the clock

frequency is divided by 2. If PRE is set to 2 then the clock

frequency is divided by 3, etc

In general, the clock frequency is

divided by PRE + 1.

If PRE is set to 0, then the

timing function is disabled;

an 8-bit timer register, TMR, which is decremented on each falling edge of the clock prescaler pulse and which sets bit 1 of the status register, SR, when it is 0.

This specification requires candidates to be familiar with a limited range of assembler language microcontroller instructions which are listed on the next page and will also be available on the Data sheet included with the examination paper.

Within the Assembler Language Instructions it is useful to note:-

the memory is made up of registers, each with its own separate address, R;

the

contents of a register are indicated by putting the register address

in brackets

e.g. (R)

K is

used to represent a literal,

which can be a memory

location (e.g. 0x29),

a label (e.g. display) or

a value, (e.g.

0xFA);

standard arithmetic and Boolean operators, e.g. add K to W etc;

the number of clock cycles needed to execute an instruction, since this will affect the response time of the system;

the Flags that are affected by each of the instructions;

Comments

can be added to instructions by writing them after either a

semicolon; or a double forward slash //. E.g.

NOP ; This

instruction takes one clock cycle to execute

NOP // This

instruction takes one clock cycle to execute.

After a Master Reset (or when the power is switched on), the program counter register PC is set to zero (0x00) which makes the microcontroller start to execute the instructions at address 0x00. The microcontroller will continue to execute the instructions in order unless the value in PC is changed either by a Jump instruction or a subroutine Call instruction.

|

Mnemonic |

Operands |

Description |

Operation |

Flags |

Clock cycles |

|

NOP |

none |

No operation |

none |

none |

1 |

|

CALL |

K |

Call Subroutine |

stack <=PC PC <= K |

none |

2 |

|

RET |

none |

Return from Subroutine |

PC <= stack |

none |

2 |

|

|

|

|

|

|

|

|

INC |

R |

Increments the contents of R |

(R) <= (R) + 1 |

Z |

1 |

|

DEC |

R |

Decrements the contents of R |

(R) <= (R) - 1 |

Z |

1 |

|

|

|

|

|

|

|

|

ADDW |

K |

Add K to W |

W <= W + K |

Z, C |

1 |

|

ANDW |

K |

AND K with W |

W <= W • K |

Z, C |

1 |

|

SUBW |

K |

Subtract K from W |

W <= W - K |

Z, C |

1 |

|

ORW |

K |

OR K and W |

W <= W + K |

Z, C |

1 |

|

XORW |

K |

XOR K and W |

W <= W Å K |

Z, C |

1 |

|

|

|

|

|

|

|

|

JMP |

K |

Jump to K (GOTO) |

PC <= K |

none |

2 |

|

JPZ |

K |

Jump to K on zero |

PC <= K if Z=1 |

Z=1 |

2 |

|

JPC |

K |

Jump to K on carry |

PC <= K if C=1 |

C=1 |

2 |

|

|

|

|

|

|

|

|

MOVWR |

R |

Move W to the contents of R |

(R) <= W |

Z |

1 |

|

MOVW |

K |

Move K to W |

W <= K |

Z |

1 |

|

MOVRW |

R |

Move the contents of R to W |

W <= (R) |

Z |

1 |

|

|

|

|

|

|

|

Assembly Language Instructions

|

NOP |

This instruction has no effect on any of the registers or flags. But it does take one clock cycle to execute and so can be used to create short time delays and can be useful for synchronising input and output operations.

|

|

CALL K |

Subroutines are a useful way of being able to make frequently needed groups of instructions available anywhere within a program. They are also a convenient way of breaking up a program into manageable sections. To transfer execution to a subroutine, the instruction CALL is used. CALL is followed by the memory address of the start of the subroutine or the label pointing to the start of the subroutine, e.g. CALL 0x7B, CALL delay The CALL instruction takes 2 clock cycles to execute because it has to store the current value of the program counter, PC, onto the stack, decrement value in the stack pointer, and then load the address of the subroutine into the program counter. Call does not alter any Flags.

|

|

RET |

This instruction is used to end a subroutine and transfer execution back to the main program. It does not take any additional information and does not alter any flags. It does take 2 clock cycles to execute and this should be considered in systems where there are time constraints. In operation, the last value stored in the stack is loaded into the PC and the value in the stack pointer is incremented.

|

|

INC R DEC R |

These instructions are used to increase or decrease the contents of a register by 1. Both instructions take one clock cycle and so are quickly executed. Both instructions are followed by the register address. In operation, the contents of the register is increased or decreased by 1 and the result stored back in the register. If, as a result of these instructions, 0 is loaded back into the register, then the Zero flag, Z, is set.

|

|

ADDW K ANDW K SUBW K ORW K XORW K |

These instructions provide arithmetic and Boolean operations. All take one clock cycle to execute. They operate on the contents of the Working register, W, and restore the resulting value back in W. If the value loaded back into W is zero then the Z flag is set to 1. If the value loaded back into W is greater than 255 or less than 0, then the Carry flag, C, is set to 1.

|

|

JMP K |

This instruction is used to change the location of the next instruction to be executed. It differs from the CALL instruction as no return address is stored. It can be thought of as a GOTO instruction. The JMP instruction is followed by the address of the next instruction to be executed or a label pointing to the address, e.g. JMP 0x7B or JMP delay In operation, this address is loaded into the program counter, PC and takes 2 clock cycles to execute.

|

|

JPZ K |

This instruction is used to change the location of the next instruction to be executed ONLY IF the zero flag, Z, is set. It is followed by the address of the next instruction to be executed or a label pointing to the address, e.g. JPZ 0x7B or JPZ delay In operation, if Z is 1, then this address is loaded into the program counter, PC. If Z is 0 then the PC is not changed. Either way the instruction takes 2 clock cycles to execute.

|

|

JPC K |

This instruction is used to change the location of the next instruction to be executed ONLY IF the carry flag, C, is set. It is followed by the address of the next instruction to be executed or a label pointing to the address, e.g. JPC 0x7B or JPC delay In operation, if C is 1, then this address is loaded into the program counter, PC. If C is 0 then the PC is not changed. Either way the instruction takes 2 clock cycles to execute.

|

|

MOVWR R |

This instruction is used to move the contents of the W register into the register R. It takes 1 clock cycle to execute and the zero flag, Z, is set if the value stored in R is 0.

|

|

MOVW K |

This instruction is used to move the value, K, into the working register, W. It takes 1 clock cycle to execute and the zero flag, Z, is set if the value stored in W is 0.

|

|

MOVRW R |

This instruction is used to move the contents of the register, R, into the working register W. It takes 1 clock cycle to execute and the zero flag, Z, is set if the value stored in W is 0.

|

Using Assembly Language Instructions

Below are some examples of the use of assembly language instructions for some frequently used operations. It is assumed in all of these examples that the microcontroller has a clock speed of 1MHz.

Setting the direction of the bits of a port.

The system needs PORTA to have D0, D1, D2 to be inputs and D3 to D7 to be outputs.

This is achieved by:-

working out the binary value to be written to the data direction register TRISA,

converting it to hexadecimal,

moving this value into the working register, W,

moving the contents of W into TRISA.

Since a 1 sets the bit to input and a 0 sets the bit to output, the binary value is 00000111.

This is 0x07 as a hexadecimal number.

To put this value into W, the instruction is MOVW 0x07.

To move W to TRISA, the instruction is MOVWR TRISA.

So the assembler code is

MOVW 0x07

MOVWR TRISA

This technique can be applied to any of the bits of any of the ports of the microcontroller and takes 2µs to execute.

Masking bits in registers.

There are many occasions where it is just the value of a particular bit within a register that is of interest e.g. an input bit of a port, a flag within the status register, SR, etc. masking is achieved by ANDing the value in the register with a value that reduces all unwanted bits to 0.

Consider the following example.

PORTA has been set so that D0, D1, D2 are inputs and the other bits are outputs.

The system needs to know the value of just D0 and D1.

To mask all of the other bits it is necessary to work out the binary value when D0 and D1 are both 1 and all of the other bits are 0. This gives a value of 00000011 = 0x03.

If the

value of PORTA is moved to W and then ANDed with 0x03,

all of the bits except

D0 and D1 will be

turned to zero.

To move PORTA into W, the instruction is MOVRW PORTA

To AND W with 0x03, the instruction is ANDW 0x03

So the assembler code is

MOVRW PORTA

ANDW 0x03

Generating a very short time delay

This is usually achieved by repeatedly using the NOP instruction. Each NOP instruction takes 1µs to execute and so

NOP

NOP

NOP

NOP

NOP

generates a time delay of 5µs

Generating a time delay

It is wasteful of memory to produce time delays longer than a few microseconds using the technique above. The microcontroller has special facilities to achieve longer time delays which are based on two special registers, the prescaler register, PRE, and the timer register, TMR. They can be thought of as being arranged as below.

PRE is used to divide the clock frequency by a ratio that can be programmed into it. If PRE has the value of 1, then the clock frequency is divided by 2, if PRE has a value of 2, then the clock frequency is divided by 3, etc. In general the clock frequency is divided by PRE + 1.

The maximum value that the clock frequency can be divided by is 256.

If PRE is set to 0, then the timing functions are disabled.

The operation of PRE and TMR is independent of what ever else the microcontroller is doing, so the microcontroller can be carrying out other tasks while awaiting for the delay to occur.

The value in the TMR register decreases by 1 on each falling edge of the pulses from PRE. To obtain a particular time delay, the values to load into PRE and TMR need to be carefully chosen. Often there is more than one set of values that will achieve the delay.

E.g. A delay of 2ms is needed, and the microcontroller has a clock frequency of 1MHz.

One way of achieving this delay is to load PRE with 7 (0x07), which will then divide the clock frequency by 8, so giving a period of 8µs. If TMR is then loaded with 250 (0xFA), it will decrease by one every 8µs and so will reach 0 after 2ms and then set bit 1 of the status register, SR.

The same

result could also be achieved by loading PRE with 124 (0x7C)

and TMR

with 16 (0x10).

The following assembler instructions are used to load PRE and TMR with the first set of values:-

MOVW 0x07 // Move 7 (0x07) into W

MOVWR PRE // Move the contents of W into PRE

MOVW 0xFA // Move 250 (0xFA) into W

MOVWR TMR // Move the contents of W into TMR

To detect when TMR reaches zero, bit 1 of SR can be polled by the microcontroller.

This can be achieved by:-

moving SR into W,

ANDing W with 0x02 to mask all but bit 1

Jumping back to the start if the zero flag is set.

When the time period has finished, bit 1 will be 1 and so the jump will not occur.

So the full assembly instructions to achieve a 2ms delay are:-

MOVW 0x07 // Move 7 (0x07) into W

MOVWR PRE // Move the contents of W into PRE

MOVW 0xFA // Move 250 (0xFA) into W

MOVWR TMR // Move the contents of W into TMR

loop1: // Label for the start of the checking loop

MOVRW SR // Move the contents of SR into W

ANDW 0x02 // AND the contents of W with 2

JPZ loop1 // Jump to loop1 if the zero flag is not set, i.e. TMR is not zero

It should be noted that the time delay from this program will be slightly longer than 2ms.

It takes 4µs to initially set up the PRE and TMR registers and there is an error of between 4 and 7µs from the last three lines of code depending on when exactly TMR becomes zero. For accurate timing these errors need to be considered and it would be more accurate to set TMR to 249 instead of 250 which would then give an error of between 0 and 3µs.

Generating a long time delay

The maximum delay that can be achieved with a 1MHz clock and PRE and TMR both set with 255 is approximately 65.5ms.

To achieve longer delays still it is necessary to use additional registers as counters.

The example below uses the 2ms delay code above to give a delay of 0.5s (500ms). A register with an address which will not interfere with either the stack or the program is chosen as an additional timing register. In this example the register at 0xA0 will be used.

To achieve a 500ms delay requires 250 loops of the 2ms delay, so the register 0xA0 is preloaded with 250 (0xFA) which is decreased by one on each loop until it reaches zero.

The assembly instructions for a 500ms delay are:-

// Set the value for PRE. Since this is not changed by the

// program it only needs to be set once.

MOVW 0x07 // Move 7 (0x07) into W

MOVWR PRE // Move the contents of W into PRE

// Set the value for the timing register 0xA0

MOVW 0xFA // Move 250 (0xFA) into W

MOVWR 0xA0 // Move the contents of W into 0xA0

// The 2ms delay

loop2: // Label for the 2ms delay

MOVW 0xF9 // Move 249 (0xF9) into W (corrected for timing errors)

MOVWR TMR // Move the contents of W into TMR

loop1: // Label for the start of the checking loop

MOVRW SR // Move the contents of SR into W

ANDW 0x02 // AND the contents of W with 2

JPZ loop1 // Jump to loop1 if the zero flag is not set, i.e. TMR is not zero

// Every 2ms the timing register value is decreased by 1

DEC 0xA0 // Decrement the value in register 0xA0

// Check if the value is zero.

JPZ end // Jump to end if the zero flag is set

JMP loop2 // Jump to loop2 if the zero flag is not set, i.e. (0xA0) is not zero

end: // label for the end of the timing loops

Again there will be timing errors which can be minimised by adjusting the value in TMR.

Even longer time delays can be obtained by having multiple additional timing registers cascaded together.

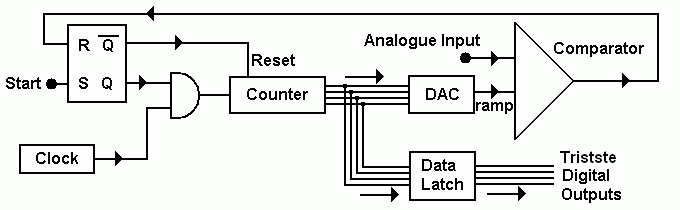

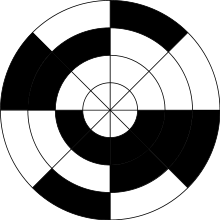

Digital Ramp ADC

The output of a binary counter is connected to a DAC.

This produces a ramp wave.

A comparator is used to compare the ramp with the signal to be converted.

When the comparator changes state, the binary data is latched.

In this way the analogue input is converted to binary numbers.

The diagram below shows a 4 bit ADC. 8, 12 and 16 bit converters are

more common.

|

8 |

4 |

2 |

1 |

|

|

0 |

0 |

0 |

0 |

0 |

|

0 |

0 |

0 |

1 |

1 |

|

0 |

0 |

1 |

0 |

2 |

|

0 |

0 |

1 |

1 |

3 |

|

0 |

1 |

0 |

0 |

4 |

|

0 |

1 |

0 |

1 |

5 |

|

0 |

1 |

1 |

0 |

6 |

|

0 |

1 |

1 |

1 |

7 |

|

1 |

0 |

0 |

0 |

8 |

|

1 |

0 |

0 |

1 |

9 |

|

1 |

0 |

1 |

0 |

10 |

|

1 |

0 |

1 |

1 |

11 |

|

1 |

1 |

0 |

0 |

12 |

|

1 |

1 |

0 |

1 |

13 |

|

1 |

1 |

1 |

0 |

14 |

|

1 |

1 |

1 |

1 |

15 |

The ramp is this way up because the summing amplifier used in the DAC is also an inverting amplifier.

This type of ADC is fairly slow (but cheap and simple).

It is ideal for data that changes fairly slowly such as vehicle or aircraft control systems.

Audio signals are also slow enough to be converted.

To convert video, a flash ADC is needed.

The diagram below shows how an 8-bit ADC could be constructed with a microcontroller.

It also shows a

typical analogue input interface, which limits the input voltage

range to

-0.6V to 12V.

PORTA of the microcontroller supplies the DAC with the 8-bit digital input. Bit D0 of PORTB detects when the output of the comparator changes. When the output voltage from the DAC exceeds the analogue input voltage, the comparator output goes high. The 10kΩ resistor and 1N4148 diode protect the base of the transistor by limiting the base current and also stops the input becoming negative. With the output of the comparator high, the D0 input to PORTB will be logic 0.

The conversion is started by making SC logic 0.

A possible control program for the microcontroller is shown below:

// Set all bits of PORTA to be outputs

MOVW 0x00 // Move 0 into W

MOVWR TRISA // Move the contents of W to TRISA

// Set PORTB so that D0 and D1 are inputs

MOVW 0x03 // Move 3 into W

MOVWR TRISB // Move the contents of W to TRISB

// Check to see if SC is logic 0

start: // Label for loop to check if SC is logic 0

MOVRW PORTB // Move the contents of PORTB to W

// Mask all but bit D1

ANDW 0x02 // AND W with 2

JPZ main // Go to the main program if SC is logic 0

JMP start // Repeat the loop to check if SC is logic 0

main: // Label for the beginning of the main conversion program

// Set the input of the DAC to 0 by setting PORTA to 0

MOVW 0x00 // Move 0 to W

MOVWR PORTA // Move the contents of W to PORTA

loop1: //

Label for the beginning of the loop to check if the

//

conversion is complete

// Check to see if D0 is logic 0, i.e. conversion complete

MOVRW PORTB // Move the contents of PORTB to W

// Mask all but bit D0

ANDW 0x01 // AND W with 1

JPZ value // Go to the ‘end of conversions’ if D0 is logic 0

INC PORTA // Increase the value in PORTA by 1

// If

the analogue input voltage is outside the conversion

// range

then the conversion will never occur.

// It

is therefore important to check that the value in

// PORTA

does not exceed 255.

JPZ

error // If PORTA exceeds 255 and becomes 0 then the

//

zero flag is set and control is passed to an error

// handling

subroutine stored elsewhere in memory

CALL delay // It may be necessary to slow down the conversion

// process so that the DAC has time to reach a steady output.

// Delay is a subroutine elsewhere in memory.

JMP loop1 // Repeat the conversion loop

value: // Label for the end of conversions

MOVRW PORTA // Move the digital equivalent of the analogue voltage to W

Flash ADC

Illustrated is a 3-bit flash ADC with resolution 1 volt (after Tocci). The resistor net and comparators provide an input to the combinational logic circuit, so the conversion time is just the propagation delay through the network - it is not limited by the clock rate or some convergence sequence. It is the fastest type of ADC available, but requires a comparator for each value of output (63 for 6-bit, 255 for 8-bit, etc.) Such ADCs are available in IC form up to 8-bit and 10-bit flash ADCs (1023 comparators) are planned. The encoder logic executes a truth table to convert the ladder of inputs to the binary number output

To calculate the resolution of the ADC ...

The ADC below detects four different input levels

The resolution is Vref / 4

An 8 bit ADC can resolve 28 levels = 256 levels so the resolution is Vref / 256

To calculate the number of comparators (or exclusive OR gates) needed ...

2N - 1 comparators are needed where N is the number of bits in the output.

In the example below, there is a two bit output so 22 - 1 = 3 comparators needed.

For a four bit converter, 24 - 1 = 15 comparators needed.

For an 8 bit converter, 28 - 1 = 255 comparators needed. (hard to implement)

For a 16 bit converter, 216 - 1 = 65535 comparators needed. (Some CPU processors have millions of gates so this is entirely possible.)

This is a two bit flash ADC (not very useful!) 8 or 16 bits would be much more use but also very much more complex.

The circuit above has three comparators.

Each comparator is fed a proportion of the reference voltage from Vref.

If the input voltage (Vin) is too low, all the comparators will be turned off.

If Vin is a little higher, only the bottom comparator will turn on.

If Vin is a little high still, the bottom two comparators will turn on.

If Vin is high enough, all the comparators will turn on.

If all the comparators are off, the output will be 0 0. This is zero in binary.

If the bottom comparator is on, the output will be 0 1. This is one in binary.

If the bottom two comparators are on, the output will be 1 0. This is two in binary.

If all the comparators are on, the output will be 1 1. This is three in binary.

Adding extra bits is simple. More comparators are needed and the output logic gets more complex too.

Also called the parallel A/D converter, this circuit is the simplest to understand. It is formed of a series of comparators, each one comparing the input signal to a unique reference voltage. The comparator outputs connect to the inputs of a priority encoder circuit, which then produces a binary output. The following illustration shows a 3-bit flash ADC circuit:

Vref is a stable reference voltage provided by a precision voltage regulator as part of the converter circuit, not shown in the schematic. As the analog input voltage exceeds the reference voltage at each comparator, the comparator outputs will sequentially saturate to a high state. The priority encoder generates a binary number based on the highest-order active input, ignoring all other active inputs.

When operated, the flash ADC produces an output that looks something like this:

Due to the nature of the sequential comparator output states (each comparator saturating "high" in sequence from lowest to highest), the same "highest-order-input selection" effect may be realized through a set of Exclusive-OR gates, allowing the use of a simpl encoder:

And, of course, the encoder circuit itself can be made from a matrix of diodes, demonstrating just how simply this converter design may be constructed:

While there are standard ICs that will perform the 8 line to 3 bit encoding (e.g. 74HC148) it is a useful exercise to consider how this could be achieved using normal logic gates.

The complete output is shown in the truth table below.

|

|

1 |

2 |

3 |

4 |

5 |

6 |

7 |

|

D0 |

D1 |

D2 |

|

|

0 |

0 |

0 |

0 |

0 |

0 |

0 |

|

0 |

0 |

0 |

|

|

1 |

0 |

0 |

0 |

0 |

0 |

0 |

|

1 |

0 |

0 |

|

|

1 |

1 |

0 |

0 |

0 |

0 |

0 |

|

0 |

1 |

0 |

|

|

1 |

1 |

1 |

0 |

0 |

0 |

0 |

|

1 |

1 |

0 |

|

|

1 |

1 |

1 |

1 |

0 |

0 |

0 |

|

0 |

0 |

1 |

|

|

1 |

1 |

1 |

1 |

1 |

0 |

0 |

|

1 |

0 |

1 |

|

|

1 |

1 |

1 |

1 |

1 |

1 |

0 |

|

0 |

1 |

1 |

|

|

1 |

1 |

1 |

1 |

1 |

1 |

1 |

|

1 |

1 |

1 |

|

|

|

|

|

|

|

|

|

|

|

|

|

Each output needs its own encoder. Consider D0. It needs to be logic 1 when:

Op-amp 7 output is logic 1,

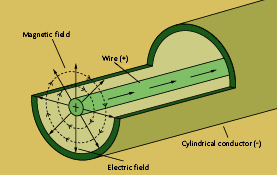

Op-amp 5 output is logic 1 and op-amp 6 output is logic 0,